Let’s be honest: AI models are smart, but they can also be wildly overconfident. Ever had ChatGPT confidently tell you that tomatoes are vegetables, only to backtrack when you call it out? Yeah, that’s it. Large Language Models (LLMs) generate responses based on the material they were trained on, but they “know” nothing about anything that happened after their training. Their knowledge can be stale, they have no access to proprietary data sources, and at times they simply make things up (this is what people mean by “AI hallucination”).

This is where Retrieval-Augmented Generation (RAG) comes in. It is the closest thing to a magic bullet for getting AI systems to stop uttering total rubbish with utmost confidence, because relevant information can now be fetched when it is needed. It is like giving your AI a library card instead of letting it rely on whatever it chooses to “remember”.

In this blog we look at advanced RAG techniques and how they make AI smarter, with a real-world example, written to be approachable even for non-technical readers.

Understanding RAG – A Quick Reminder

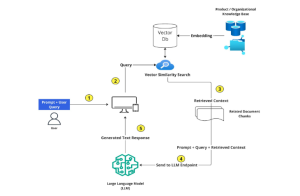

At its root, Retrieval-Augmented Generation (RAG) combines two superpowers of utmost importance to the world today:

- Retrieval: The ability to fetch relevant, current information from databases, documents, or APIs.

- Generation: Using LLMs to generate responses based on the information retrieved.

How Does Basic RAG Work?

- User Query: You ask something (e.g., “What’s the latest tax regulation for freelancers?”).

- Retrieval: The system searches for the most relevant documents (hopefully not from 2015).

- Augmented Prompting: These documents are added to the LLM’s input.

- Response Generation: The AI now crafts an answer using actual facts, instead of just making stuff up.
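To make the four steps concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the three documents are invented, the keyword-overlap `retrieve` function stands in for a real search index, and the final LLM call is left out, so the code stops at the augmented prompt.

```python
import re

# Toy knowledge base. The contents are invented for illustration only.
DOCUMENTS = [
    "Freelancers pay estimated tax every quarter.",
    "Salaried employees receive a monthly payslip.",
    "Freelancers can deduct home office costs.",
]

# A tiny, hand-picked stopword list (an assumption, not a standard one).
STOPWORDS = {"what", "s", "the", "for", "a", "is"}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its distinct non-stopword terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Step 2: rank documents by shared terms (a stand-in for a real search index)."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(doc)), doc) for doc in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query: str, context: list[str]) -> str:
    """Step 3: put the retrieved documents in front of the question."""
    sources = "\n".join(f"- {doc}" for doc in context)
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {query}"


query = "What's the latest tax regulation for freelancers?"  # Step 1
prompt = build_prompt(query, retrieve(query, DOCUMENTS))
print(prompt)  # Step 4 would send this prompt to the LLM of your choice.
```

The payslip document never reaches the prompt because it shares no terms with the question, which is the whole point: the model only sees what the retriever judged relevant.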

This is already a big step up from standard LLMs, but we can do better. Let’s talk about Advanced RAG—because why settle for “good” when we can have “mind-blowingly smart”?

Advanced RAG – How to Make AI Even Smarter?

1. Multi-Hop Retrieval

Problem: Basic RAG usually runs a single retrieval pass and calls it a day. But real-world questions often need deeper context that no single lookup provides.

Solution: Multi-hop retrieval enables AI to retrieve information iteratively, connecting different sources for a more comprehensive answer.

Example: Imagine a legal AI assistant answering, “What’s the impact of the new labor law on gig workers?” It first pulls the latest legislation, then fetches interpretations from legal experts, and only then does it generate an answer. This way, it doesn’t just spit out a single document—it pieces together a complete response.

2. Hybrid Retrieval (Because AI Shouldn’t Be Lazy)

Problem: Some documents have exact keyword matches, while others hold semantically similar but differently phrased information. Basic RAG often picks one method, missing out on valuable data.

Solution: Hybrid retrieval uses both:

- Sparse retrieval (BM25, TF-IDF): Finds exact keyword matches.

- Dense retrieval (Vector Embeddings): Captures similar meanings even if phrased differently.

This way the AI doesn’t just grab the first thing that matches on a single signal; it collects candidates from both methods and combines them before answering.
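One common way to merge the two result lists is reciprocal rank fusion (RRF), which is our choice for this sketch and not something the approach above prescribes. The code assumes you already have one ranked list from a keyword index and one from a vector index; the document IDs are made up, and `k=60` is just the conventional default.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists: each document earns 1 / (k + rank) per list it appears in."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical outputs of the two retrievers, best match first.
sparse_ranking = ["reg_12", "faq_3", "blog_7"]   # e.g. from a BM25 index
dense_ranking = ["faq_3", "guide_9", "reg_12"]   # e.g. from a vector index

fused = reciprocal_rank_fusion([sparse_ranking, dense_ranking])
print(fused)
```

Documents that both retrievers like (`faq_3`, `reg_12`) rise to the top, while documents found by only one method still make the list further down. RRF works on ranks alone, so you never have to reconcile BM25 scores with cosine similarities.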

3. Memory-Augmented RAG (So AI Remembers Stuff for More Than 10 Seconds)

Problem: AI treats every query like a first date—zero memory of past interactions. Annoying, right?

Solution: Memory-augmented RAG lets AI recall past conversations, maintaining context across multiple queries.

Example: If you ask a banking chatbot about loan eligibility and later follow up with “What documents do I need?”, it should remember your loan type instead of making you repeat yourself like a broken record.
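Here is a deliberately simple sketch of that behavior. The `ConversationMemory` class and its method names are hypothetical, and the "memory" is nothing more than the last few user messages prepended to the new question before retrieval. Production systems often ask an LLM to rewrite the follow-up into a standalone question instead.

```python
from collections import deque


class ConversationMemory:
    """Keeps the most recent user messages so follow-up questions retain context."""

    def __init__(self, max_turns: int = 5) -> None:
        # deque with maxlen silently drops the oldest turn once the limit is reached
        self.turns: deque[str] = deque(maxlen=max_turns)

    def add(self, user_message: str) -> None:
        self.turns.append(user_message)

    def contextualize(self, query: str) -> str:
        """Build the retrieval query: remembered turns first, then the new question."""
        history = " ".join(self.turns)
        return f"{history} {query}".strip()


memory = ConversationMemory(max_turns=3)
memory.add("Am I eligible for a home loan?")

search_query = memory.contextualize("What documents do I need?")
print(search_query)
```

The retriever now sees "home loan" alongside "documents", so it can pull the home loan checklist instead of a generic document list.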

4. Active Learning for Real-Time Updates (No More Outdated Nonsense)

Problem: Static knowledge bases become outdated (hello, ever-changing tax laws and compliance policies).

Solution: Active learning lets AI continuously update its retrieval sources, so responses are far more likely to reflect the latest information. It does this by:

- Prioritizing frequently accessed or updated documents.

- Flagging outdated responses for human verification.

- Dynamically ingesting new data.

Basically, AI finally learns to keep up with reality instead of pretending 2020 never ends.
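Two of those behaviors, flagging outdated documents and ingesting new data, can be sketched in a few lines. The `Doc` type, the one-year staleness threshold, and the document IDs are all assumptions made for this example; a real pipeline would also re-index the updated text.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Doc:
    doc_id: str
    text: str
    last_updated: date


def flag_stale(docs: list[Doc], today: date, max_age_days: int = 365) -> list[str]:
    """Return IDs of documents older than the threshold, for human review."""
    cutoff = today - timedelta(days=max_age_days)
    return [doc.doc_id for doc in docs if doc.last_updated < cutoff]


def ingest(store: dict[str, Doc], doc: Doc) -> None:
    """Add a document, or replace the stored copy only if the incoming one is newer."""
    current = store.get(doc.doc_id)
    if current is None or doc.last_updated > current.last_updated:
        store[doc.doc_id] = doc


# Hypothetical knowledge base with one old and one recent entry.
store: dict[str, Doc] = {}
ingest(store, Doc("tax_rules", "Old tax guidance.", date(2020, 3, 1)))
ingest(store, Doc("kyc_policy", "Current KYC policy.", date(2024, 11, 15)))

print(flag_stale(list(store.values()), today=date(2025, 1, 1)))

# A fresher version arrives and replaces the stale copy.
ingest(store, Doc("tax_rules", "Updated tax guidance.", date(2024, 12, 20)))
print(flag_stale(list(store.values()), today=date(2025, 1, 1)))
```

Passing `today` in explicitly, instead of calling `date.today()` inside the function, keeps the check easy to test and reproduce.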

5. Smart Chunking & Query Rewriting (No More Broken Contexts)

Problem: Standard chunking splits documents into fixed-size pieces with no regard for meaning, often losing context.

Solution: Semantic-aware chunking ensures related information stays together, and query rewriting refines user inputs for better retrieval.

Example: Instead of retrieving a single paragraph about interest rates, a banking AI pulls the whole section covering eligibility, repayment options, and fine print. You know, actual helpful information.
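True semantic chunking usually relies on embeddings to decide where topics change. As a much simpler stand-in, the sketch below respects paragraph boundaries: it packs whole paragraphs into a chunk until a size limit is hit and never cuts one in half. The function name, the `max_chars` limit, and the sample text are assumptions for illustration.

```python
def chunk_by_paragraph(text: str, max_chars: int = 200) -> list[str]:
    """Group whole paragraphs into chunks of at most max_chars, never splitting a paragraph."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if not current or len(candidate) <= max_chars:
            # Either the chunk is empty (accept even an oversized paragraph) or it still fits.
            current = candidate
        else:
            chunks.append(current)
            current = para
    if current:
        chunks.append(current)
    return chunks


policy = "Eligibility: A.\n\nRepayment: B.\n\nFine print: C."
for chunk in chunk_by_paragraph(policy, max_chars=40):
    print(repr(chunk))
```

With this limit, the eligibility and repayment paragraphs travel together in one chunk and the fine print gets its own, so a retrieved chunk always contains complete thoughts.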

Real-World Use Case – AI-Powered Customer Support for Banking

Scenario:

A bank wants an AI chatbot that accurately answers customer queries about loan eligibility, transactions, and security policies. But banking regulations change constantly, and the AI can’t afford to hallucinate its way into a lawsuit.

Solution: Advanced RAG to the Rescue

- Hybrid Retrieval: Sparse search fetches exact regulations, while dense retrieval understands fuzzy user queries like “What’s a good credit score for a home loan?”

- Multi-Hop Retrieval: The AI fetches loan policies, customer eligibility records, and interest rate trends before generating a response.

- Memory-Augmented RAG: If a customer asks about loans today and credit cards next week, the AI remembers their financial profile.

- Active Learning: Banking regulations change? The AI flags outdated information and self-updates.

- Semantic Chunking: Instead of retrieving scattered snippets, it pulls entire, contextually relevant sections.

The Future of Advanced RAG

Looking ahead, advanced RAG could bring the following:

- Fewer AI Hallucinations: Responses are backed by real, up-to-date knowledge.

- Better Customer Experience: Personalized and consistent responses.

- Regulatory Compliance: AI doesn’t get the institution sued (always a plus).

- Personalized Retrieval: AI that learns and adapts to the preferences of a particular user.

- Federated RAG Systems: Secure retrieval across distributed databases (ideal for sensitive sectors such as healthcare and finance).

- Neural-Symbolic Integration: AI that combines deep learning with symbolic reasoning (so it actually reasons instead of just predicting text).

End to End Technology Solutions
